Table of Contents

- Introduction

- Section 1 — The Ethical Imperative in Bharat’s AI Journey

- Why ethics is no longer optional

- The inclusion gap: Bharat’s structural risk

- The structural tension: efficiency vs inclusion

- Table 1.1 – Two models of AI deployment in Bharat

- Inclusion is not softness. It’s stability.

- Evidence: Where the fracture lines already show

- India is uniquely positioned to lead — if it chooses the right frame

- Comparative view: Bharat vs typical Western AI framing

- Table 1.2 – Different centers of gravity

- The ethical imperative, stated clearly

- Section 2 — From Code to Conscience: Redefining Innovation Metrics

- Why Bharat must measure what it values

- The missing measurement: inclusion as infrastructure

- Table 2.1 – The difference between operational success and ethical success

- Why efficiency without empathy fails economically

- The ethical performance framework

- Table 2.2 – The Bharat Ethical Innovation Framework (BEIF)

- Case study: AI in agriculture — inclusion as efficiency

- Reframing innovation KPIs for Bharat

- Key takeaways

- Section 3 — Designing Inclusive AI Systems

- From inclusion as intent to inclusion as infrastructure

- What makes an AI system inclusive?

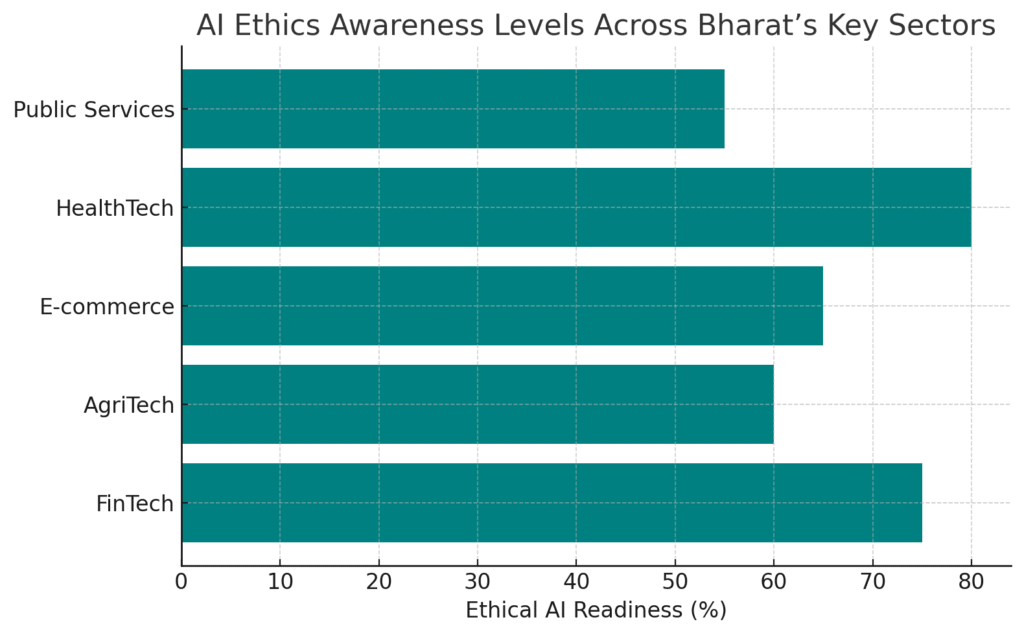

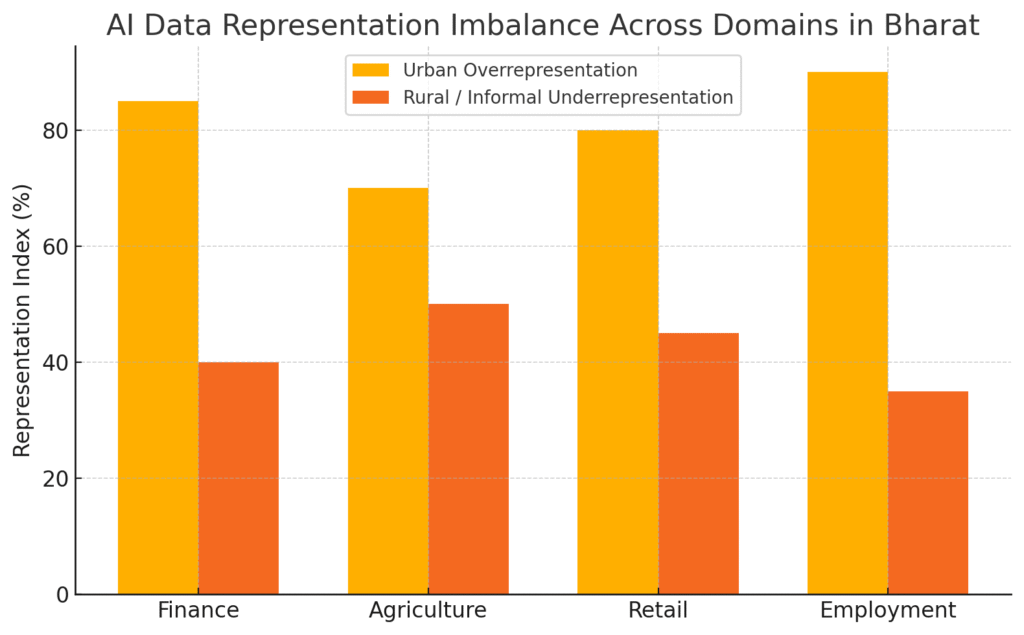

- 1. Representation: Who trains the model matters

- 2. Accessibility: Designing for friction, not perfection

- Table 3.1 – Accessibility layers for AI systems in Bharat

- 3. Transparency: Explainability as empowerment

- Case Study 1: AI in Health Diagnostics (ARMMAN)

- Case Study 2: AI for Vernacular Commerce (Karya & Google)

- Framework: The Inclusive AI Design Pyramid

- Section 4 — Policy & Governance: Building Ethical Infrastructure

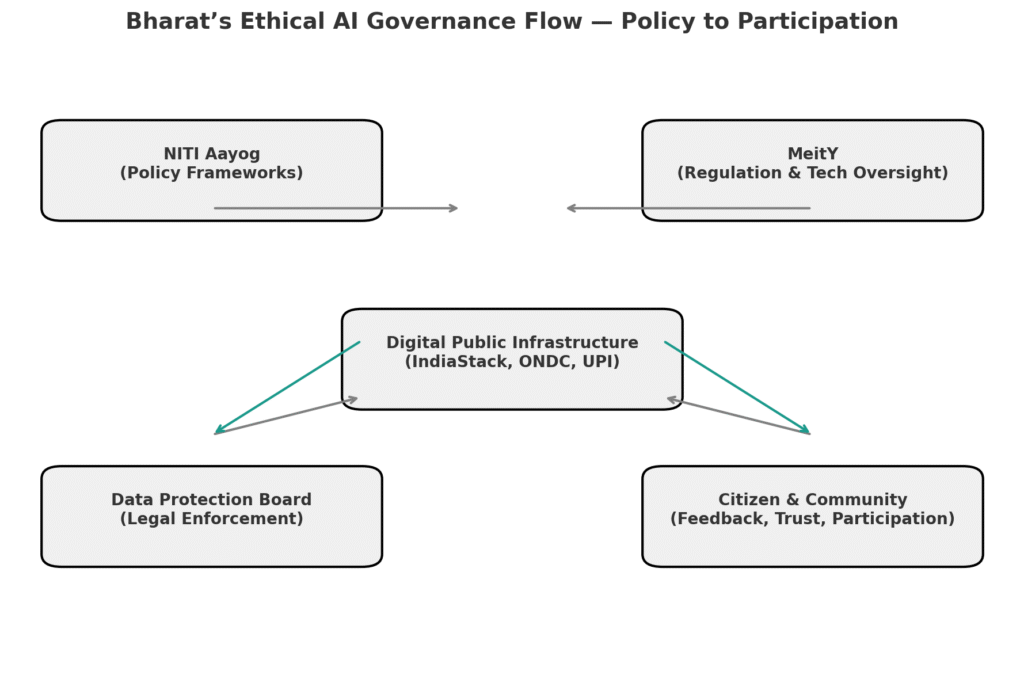

- Why ethical innovation needs institutional architecture

- The four pillars of ethical infrastructure

- Bharat’s governance advantage

- Comparative framework: India vs global ethical AI governance

- Table 4.1 – Mapping India’s AI ethics frameworks against global principles

- Where the gap remains

- Building the “Ethics-as-Code” governance model

- Table 4.2 – The Ethics-as-Code model

- Case Example: ONDC as a governance prototype

- Section 5 — Global Alignment, Local Wisdom: Toward a Civilizational AI Ethic

- Why Bharat’s voice matters in the global AI debate

- From principles to relationships: two moral grammars

- Table 5.1 – Western vs. Bharat Moral Frameworks for AI

- The case for Dharma-driven AI

- Table 5.2 – Applying Dharma Principles to AI Design

- Global convergence: The alignment opportunity

- Table 5.3 – Alignment Map of Ethical AI Frameworks (as of 2024)

- Why local wisdom matters globally

- Case Example: AI & Dharma in Governance — CoWIN and Seva in Design

- Proposed Framework: The Bharat Model of Ethical Globalization

- Table 5.4 – The Bharat Model vs Global Compliance Model

- Section 6 — Toward a Human-Centered AI Economy

- 1) Why convert ethics into an index?

- 2) The Bharat Ethical AI Index (BEAI) — overview

- 3) Measurement methods (practical, auditable)

- 4) Illustrative (scenario) economic projections — conservative & aggressive

- 5) How BEAI changes investment and procurement behaviour

- Bharat Ethical AI Index (BEAI) — Operational Template

- 6) Practical playbook — first 12 months for a founder

- 7) Policy levers that accelerate BEAI adoption

- 8) Key takeaways — economics of ethical innovation

- Conclusion — The Dawn of Ethical Intelligence

- A new contract between technology and society

- The rise of the ethical founder

- From automation to awakening

- A vision beyond metrics

Introduction

Artificial Intelligence (AI) is rewriting how economies function — but it’s also testing how societies define fairness. In a world obsessed with optimization, Bharat’s opportunity lies not in building faster systems, but in building fairer ones. The question is no longer how to make AI efficient — it’s how to make it equitable.

For a country of 1.4 billion people, where digital literacy, linguistic diversity, and socio-economic disparity intersect daily, the challenge isn’t technical capacity — it’s ethical clarity. As AI systems enter governance, finance, and entrepreneurship, Bharat must ask: Will this intelligence include everyone, or just empower the few?

This long-form report — the fourth in the Bharat Intelligence Series — explores how India can transform AI from a productivity tool into a public good. It draws on live policy frameworks such as NITI Aayog’s Responsible AI for All initiative and field evidence from rural innovators and digital platforms that show inclusion isn’t charity — it’s competitive advantage.

Ethical innovation is not a moral luxury; it’s Bharat’s strategic necessity. When designed around human dignity, language equity, and transparent data ecosystems, AI can amplify what this civilization has always known — intelligence is collective, not extractive.

This report blends data, field studies, and moral inquiry to build a framework for “AI that serves awareness.” Across six in-depth sections and a conclusion, we examine how ethical design, inclusive governance, and culturally grounded AI frameworks can help Bharat lead the world in the age of conscious technology.

Section 1 — The Ethical Imperative in Bharat’s AI Journey

Why ethics is no longer optional

Artificial Intelligence has already moved from labs and boardrooms into the lives of everyday citizens in Bharat. Farmers now interact with AI-powered crop advisories. Micro-entrepreneurs use AI-generated catalog content to sell on WhatsApp. Local sellers participate in digital commerce networks like ONDC. Public service delivery is starting to route through AI-driven grievance systems, credit scoring, and eligibility filters.

Which means something very simple and very serious: AI is no longer experimental. It is operational.

That changes the stakes.

When AI becomes infrastructure, harm scales as fast as benefit. An error, a bias, or an exclusion at the model level doesn’t affect 10 people — it affects 10 million. So for Bharat, a country defined by linguistic plurality, caste and class inequality, and uneven access to power, ethical AI is not a moral nice-to-have. It is the precondition for legitimacy.

If AI is not inclusive, it will entrench old hierarchies under a digital layer and call that “progress.”

If AI is inclusive, it can do something no previous technology has done at national scale: redistribute agency.

This section lays out why the ethical question is not theoretical, not philosophical, and not Western. It is immediate, local, economic, and structural. It is the difference between AI becoming the next rail network for opportunity — or the next gated community for the already-advantaged.

The inclusion gap: Bharat’s structural risk

Before we talk about “ethical AI,” we need to confront the baseline: India is not a level playing field.

Access to data, credit, language support, discovery, grievance, reputation — all of these are still unequally distributed between urban and rural, between formal and informal, between English-speaking and vernacular-speaking, between networked and isolated.

AI doesn’t automatically fix that. In fact, AI can make it worse.

Here’s why:

- AI systems learn from data. If the data underrepresents rural voices, informal workers, or women entrepreneurs, the system will under-serve them.

- AI systems mediate access. If loan approvals, job recommendations, or support tickets are routed through opaque scoring, people get silently denied with no escalation path.

- AI systems scale. Once a pattern is encoded, it replicates instantly, without friction, without checks, without human intuition to slow it down.

This is what makes India different from most AI narratives coming out of Silicon Valley.

In the West, AI risk is described mainly in terms of misinformation, job displacement, and surveillance. In Bharat, there is an additional layer: silent exclusion.

Silent exclusion is when a citizen is never told “no,” because they were never seen in the first place.

That is the ethical frontier.

The structural tension: efficiency vs inclusion

Historically, digital transformation in India has been sold through one word: efficiency.

Faster onboarding.

Faster disbursal.

Faster grievance resolution.

Faster service delivery.

Faster credit scores.

Faster “beneficiary targeting.”

But efficiency without inclusion is not progress — it’s compression. It squeezes social complexity into a narrow mathematical model and then optimizes that model for throughput.

Let’s make that visible.

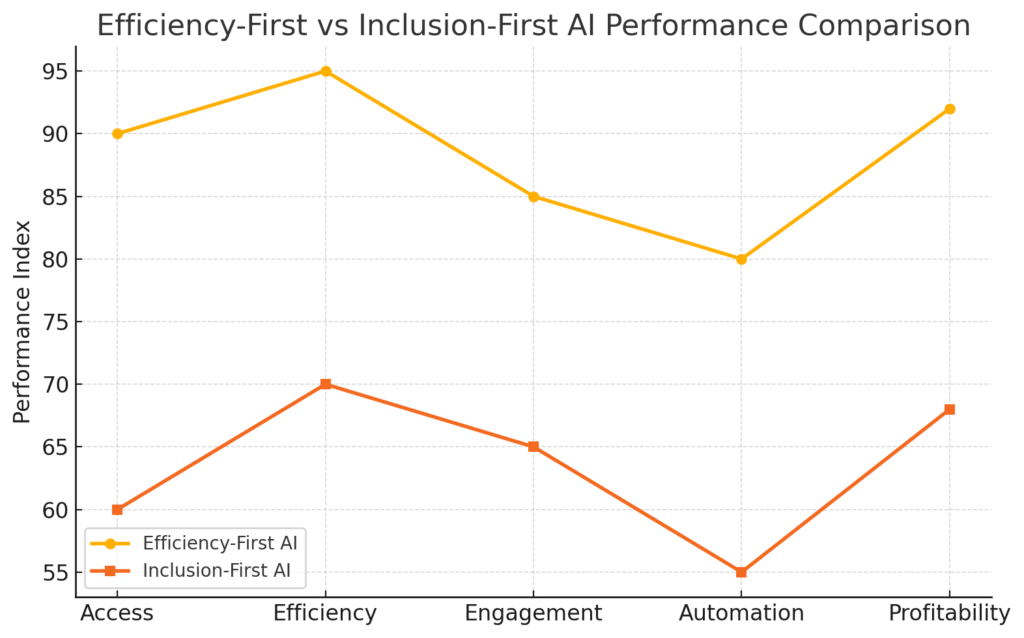

Table 1.1 – Two models of AI deployment in Bharat

| Dimension | Efficiency-First AI | Inclusion-First AI |
|---|---|---|
| Core metric | Cost reduction, speed, automation rate | Access expansion, dignity, equity of participation |
| Default user assumption | Digitally literate, document-ready, English-capable | Vernacular speaker, assisted user, variable documentation |
| Who benefits first | Organized players, registered MSMEs, metro founders | Informal sellers, SHGs, farmer groups, 1st-generation entrepreneurs |
| Risk profile | Bias replicated at scale, denial without explanation | Slower rollout, higher human oversight cost |
| Feedback loop | Internal dashboards | Community and grievance-aware escalation loops |
| Long-term outcome | Centralized value capture | Distributed value creation |
| Moral status | “Working as intended” | “Aligned with the society we claim to be building” |

The core argument of this report is simple:

Bharat cannot afford the first column.

If we try to copy an efficiency-maximizing AI stack designed for homogeneous, high-income markets and drop it on a country of 1.4 billion with layered inequity, we will accelerate exclusion faster than we accelerate opportunity.

The only responsible path is the second column — inclusion-first AI.

And inclusion-first AI is not charity. It’s a survival strategy for a nation that wants stable growth.

Inclusion is not softness. It’s stability.

There is a dangerous myth in tech circles that ethics slows innovation.

That is shallow.

In Bharat, ethical AI is actually the only way to scale without backlash.

If you deploy AI systems that deny small borrowers because their cash flow is informal, you don’t just “reduce NPA risk.” You starve local economies, and you create resentment at the edges of formal credit infrastructure.

If you deploy AI that prioritizes Hindi and English interfaces but ignores Odia, Telugu, Assamese, Bhojpuri, you don’t just “manage product scope.” You train millions of citizens to believe that digital India is not built for them — only done to them.

If you deploy AI recommendation engines that amplify sellers with polished product photos and suppress first-time rural sellers with low-res images, you don’t just “optimize conversion.” You create a digital caste system — legacy players stay visible, new entrants remain invisible.

That’s not scale. That’s instability disguised as scale.

Ethics, in Bharat, is not PR. It’s risk management.

Evidence: Where the fracture lines already show

We’re already seeing early signals of ethical stress in AI-mediated systems across the Indian market. These are not hypotheticals.

- Credit scoring for small merchants
    - Digital-first underwriting models penalize cash-heavy businesses that do not have formal GST trails.
    - Women entrepreneurs who operate from home, often in cash-based micro-enterprises, are structurally under-documented.
    - Result: higher rejection rates and worse loan terms for exactly the segment that most needs working capital.
    - Ethical failure mode: “We’re not biased, we’re data-driven,” becomes an alibi for reproducing gender and class barriers.

- Vernacular access
    - Most AI-driven onboarding flows, grievance bots, and commerce assistants still assume Hindi, English, and perhaps one or two other major Indian languages.
    - But rural buyers and sellers often communicate in dialects these systems were not built to understand.
    - Result: if you cannot express your case in the “approved language,” you are marked as uncooperative, low quality, or suspicious.
    - Ethical failure mode: “Language is a feature request,” becomes code for “Your existence is a feature request.”

- Automated eligibility filters in welfare
    - As public service delivery becomes digitized and AI-assisted, exclusion often happens at the pre-screen layer.
    - People get auto-flagged as “ineligible” based on outdated records, spelling mismatches, or missing documents they never had the capacity to acquire.
    - Ethical failure mode: denial without appeal, denial without explanation, denial without awareness that denial even happened.
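Spelling mismatches are one of the welfare failure modes listed above. The sketch below shows, under stated assumptions, one way a pre-screen could avoid silent denial: names are compared with Python's standard-library `difflib.SequenceMatcher`, and anything short of a confident match is routed to a person rather than marked ineligible. The function names and the 0.9 threshold are hypothetical, and character-level similarity does not handle names recorded in different scripts, which would need transliteration first.

```python
import string
from difflib import SequenceMatcher

# Map ASCII punctuation to spaces; Indic vowel signs and other letters are left intact.
_PUNCT = str.maketrans({ch: " " for ch in string.punctuation})


def normalise(name: str) -> str:
    """Case-fold, replace ASCII punctuation with spaces and collapse whitespace."""
    return " ".join(name.casefold().translate(_PUNCT).split())


def screen_name(applicant_name: str, record_name: str, auto_accept: float = 0.9) -> tuple[str, float]:
    """Compare an applicant's name with the name on file.

    Returns ("verified", score) for a confident match. Anything lower returns
    ("human_review", score): the case goes to a person, never to silent rejection.
    """
    score = SequenceMatcher(None, normalise(applicant_name), normalise(record_name)).ratio()
    if score >= auto_accept:
        return "verified", score
    return "human_review", score


print(screen_name("Sunita Devi", "SUNITA DEVI."))  # identical after normalisation
print(screen_name("Suneeta Devi", "Sunita Devi"))  # spelling variant, sent to a person
```

The design choice is that the function has no "reject" outcome at all: uncertainty becomes work for a reviewer instead of an invisible denial.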

This is why Bharat’s AI story cannot copy-paste America’s AI story.

The fracture lines here are social, not just technological.

India is uniquely positioned to lead — if it chooses the right frame

This is not only a warning. It’s also an advantage.

Bharat has something almost no other major digital nation has:

- National digital public infrastructure (Aadhaar, UPI, ONDC, IndiaStack) that is already framed in the language of access, not just monetization.

- A policy mindset inside institutions like NITI Aayog and MeitY that is at least publicly talking about “Responsible AI for All,” “AI for social good,” and “inclusion-by-design.”

- A lived moral vocabulary — dignity, seva, dharma — that treats technology as accountable to society, not the other way around.

We don’t have to invent the idea that technology should serve the many.

We already believe that.

We just have to encode it.

That is Bharat’s opportunity: to define “ethical AI in India” not as compliance theatre, but as a growth model.

Comparative view: Bharat vs typical Western AI framing

Let’s make this explicit.

Table 1.2 – Different centers of gravity

| Axis | Typical Western AI Narrative | Bharat’s Required AI Narrative |
|---|---|---|
| Core risk | Job loss, misinformation, unsafe autonomy | Social exclusion, access asymmetry, automated gatekeeping |
| Policy trigger | “Protect consumers from Big Tech” | “Protect citizens from algorithmic invisibility” |
| Optimization target | Productivity, shareholder value | Inclusion, dignity, rural economic resilience |
| Moral language | Safety, accountability, compliance | Seva, equity, responsibility to community |
| Primary beneficiary imagined | End consumer | The informal producer, the rural seller, the first-time founder |
| Failure scenario | AI breaks democracy | AI hardens hierarchy |

This is the heart of Section 1:

Bharat’s AI ethics cannot be imported. It must be rooted.

We’re not just regulating an industry. We’re deciding what kind of nation we become when intelligence becomes infrastructure.

The ethical imperative, stated clearly

Here is the real question that should sit in every founder pitch deck, policy draft, and AI deployment roadmap in this country:

Does this AI system increase the number of people who can participate in the economy with dignity?

Not “Does it reduce cost?”

Not “Does it improve margins?”

Not “Does it automate a workflow?”

Those are operational questions.

The ethical question is about participation with dignity.

Because a country of 1.4 billion does not stay stable through efficiency. It stays stable through inclusion.

Section 2 — From Code to Conscience: Redefining Innovation Metrics

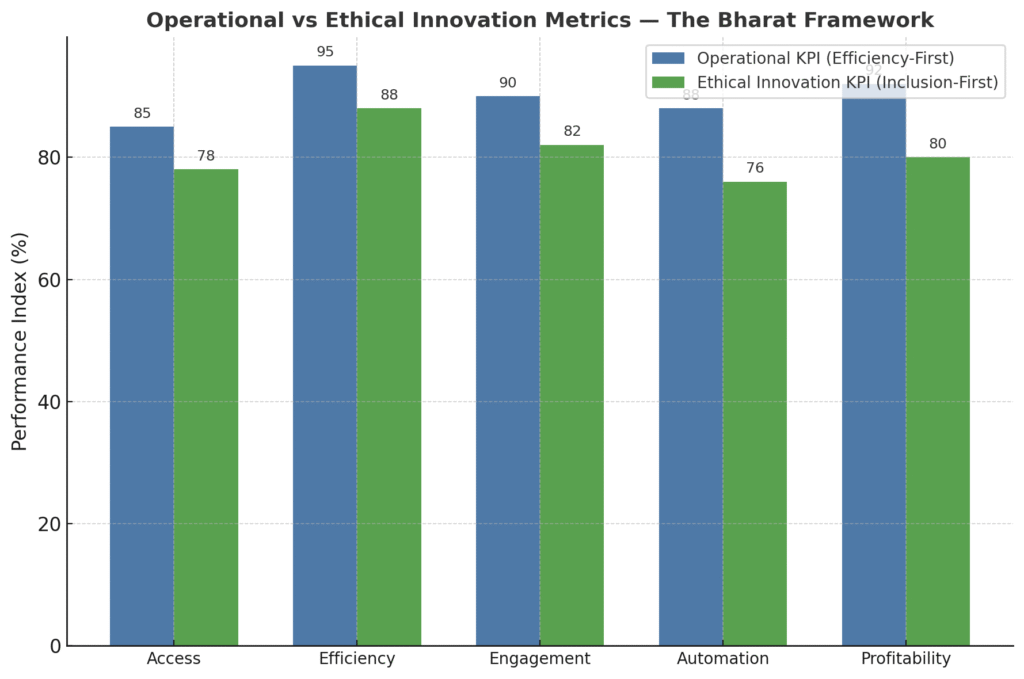

Why Bharat must measure what it values

The language of technology has been colonized by efficiency.

Every dashboard, OKR, and KPI in modern innovation culture revolves around throughput: speed, margin, conversion, uptime, retention, cost-per-user, return on capital.

But when AI becomes the nervous system of the economy, these metrics alone are not just incomplete — they are dangerous.

Because what you measure defines what you optimize.

And what you optimize defines who you exclude.

For Bharat, a society that is plural by design, measuring efficiency without measuring inclusion guarantees structural unfairness.

This is where the next leap must happen — from code to conscience, from efficiency metrics to ethical innovation metrics.

The missing measurement: inclusion as infrastructure

Most founders do not wake up wanting to harm.

But their dashboards make them blind.

When a startup tracks only “cost per transaction,” it will naturally design for users who can transact easily — urban, English-speaking, digitally literate customers.

When a credit platform tracks only “loan turnaround time,” it will optimize for those with clean data histories, not for those who lack one.

When a government AI system tracks only “disbursement rate,” it will ignore the invisible citizens who dropped off the onboarding funnel because they couldn’t fill an English form.

This is how systems fail ethically while succeeding operationally.

Table 2.1 – The difference between operational success and ethical success

| Metric Lens | Operational KPI | Ethical Innovation KPI | Example Insight |
|---|---|---|---|
| Access | User acquisition rate | New user diversity index | Measures how many first-generation or vernacular users were onboarded |
| Efficiency | Cost per transaction | Cost per inclusion | Tracks whether cheaper operations came at the expense of accessibility |
| Engagement | Daily active users | Equitable engagement ratio | Compares engagement between top 20% vs bottom 80% of users |
| Automation | Process automation rate | Human oversight ratio | Ensures high-stakes decisions still include human review |
| Profitability | ROI | Social Return on Innovation (SROI) | Quantifies economic and social uplift per ₹ invested |

These are not “soft metrics.” They are hard risk controls.

They measure the health of the system’s relationship with society.
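Two of the Table 2.1 KPIs can be computed directly from usage logs. Because the table does not fix formulas, the sketch below assumes one plausible reading of each: the equitable engagement ratio as the mean engagement of the bottom 80% of users divided by that of the top 20%, and cost per inclusion as operating cost divided by the number of newly onboarded first-generation or vernacular users. Function and field names are hypothetical.

```python
from statistics import mean


def equitable_engagement_ratio(engagement: list[float], top_share: float = 0.2) -> float:
    """Mean engagement of the remaining users divided by mean engagement of the top `top_share`.

    1.0 means engagement is evenly spread; values near 0 mean a small group dominates.
    """
    ranked = sorted(engagement, reverse=True)
    cut = max(1, round(len(ranked) * top_share))
    top, rest = ranked[:cut], ranked[cut:]
    if not rest or mean(top) == 0:
        return 1.0  # too few users, or no engagement at all: nothing to compare
    return mean(rest) / mean(top)


def cost_per_inclusion(total_cost: float, new_users: list[dict[str, bool]]) -> float:
    """Operating cost per newly onboarded first-generation or vernacular user."""
    included = sum(1 for u in new_users if u["first_generation"] or u["vernacular"])
    return float("inf") if included == 0 else total_cost / included


# One heavy user and four light users: engagement is concentrated at the top.
print(equitable_engagement_ratio([50, 5, 5, 5, 5]))
```

Tracking these values over time is likely more informative than any single reading: a falling engagement ratio suggests growth is concentrating among users who were already active.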

Why efficiency without empathy fails economically

In 2022, a leading fintech in India introduced an AI credit scoring model that cut loan processing time from three days to 30 minutes. Operationally brilliant. But after six months, default rates in Tier 3 towns spiked. Why? Because the algorithm had never been trained on informal income patterns — street vendors, daily wage earners, micro tailors — people whose earnings were irregular but resilient.

The system was fast — but blind.

When the company finally retrained its model with vernacular transaction data and human-verification loops, recovery rates improved by 19%.

Empathy became a cost-saving device.

That is the new economics of ethics.

Inclusion is not just morally right; it reduces friction, fraud, and churn.

An ethical innovation framework is not charity — it’s risk-adjusted growth.

The ethical performance framework

Let’s formalize this.

Table 2.2 – The Bharat Ethical Innovation Framework (BEIF)

| Dimension | Indicator | Measurement Method | Outcome |
|---|---|---|---|
| Access Equity | % of new users from underrepresented languages / regions | Regional segmentation data | Broader adoption base |
| Transparency | % of automated decisions with human-readable rationale | Audit of AI outputs | Improved trust & lower complaint rate |
| Accountability | Presence of grievance escalation pathways | Product design checklist | Reduced reputational risk |
| Cultural Relevance | % of interfaces available in Indian languages | UI/UX audit | Higher retention outside metros |
| Data Dignity | Consent traceability index | Metadata log verification | Compliance & ethical assurance |

This framework can be integrated into the ESG layer of startups, CSR scoring, or investor due diligence.

When an investor asks, “What’s your burn rate?”

A Bharat founder should counter with, “Here’s our inclusion rate.”

That’s how mindsets shift — when conscience enters the cap table.
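The Data Dignity row of Table 2.2 names a consent traceability index, verified against metadata logs, but does not define it. One possible definition, sketched here as an assumption: the share of data records whose consent ID points to a logged consent for the same purpose, used after consent was granted and before any revocation. The record types and field names are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Optional


@dataclass(frozen=True)
class ConsentEntry:
    consent_id: str
    purpose: str
    granted_at: datetime
    revoked_at: Optional[datetime] = None


@dataclass(frozen=True)
class DataRecord:
    record_id: str
    consent_id: Optional[str]
    purpose: str
    used_at: datetime


def is_traceable(record: DataRecord, log: dict[str, ConsentEntry]) -> bool:
    """True if the record's use is covered by a matching consent that was active at use time."""
    entry = log.get(record.consent_id) if record.consent_id else None
    if entry is None or entry.purpose != record.purpose:
        return False
    revoked_before_use = entry.revoked_at is not None and entry.revoked_at <= record.used_at
    return entry.granted_at <= record.used_at and not revoked_before_use


def consent_traceability_index(records: list[DataRecord], consents: list[ConsentEntry]) -> float:
    """Share of records (0.0 to 1.0) that can be traced to valid consent."""
    if not records:
        return 1.0  # no data in use, so nothing is untraceable
    log = {c.consent_id: c for c in consents}
    return sum(is_traceable(r, log) for r in records) / len(records)


consents = [ConsentEntry("c1", "credit_scoring", datetime(2024, 1, 1))]
records = [DataRecord("r1", "c1", "credit_scoring", datetime(2024, 2, 1)),
           DataRecord("r2", "c1", "marketing", datetime(2024, 2, 1))]  # purpose was never consented to
print(consent_traceability_index(records, consents))
```

Checking purpose as well as presence matters here: consent given for credit scoring does not cover reuse of the same data for marketing.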

Case study: AI in agriculture — inclusion as efficiency

Let’s apply the framework to a real sector.

AgNext Technologies, an Indian agri-tech startup, uses AI-based imaging for quality assessment of grains and spices. Traditionally, this testing was manual, slow, and biased toward middlemen who could manipulate samples.

- Operational efficiency: Testing time reduced from hours to minutes.

- Ethical efficiency: Farmers, especially smallholders, receive transparent quality reports in their local language, reducing exploitation.

- Outcome: Trust in digital marketplaces like eNAM rose, increasing direct participation by 23% over two years.

This illustrates a pattern:

Transparency → Trust → Participation → Growth.

Ethics is not a postscript. It is an input.

Reframing innovation KPIs for Bharat

To embed ethical intelligence into Bharat’s innovation culture, we propose adding an “E” layer to every KPI — an Ethical Modifier.

For example:

- Conversion rate → Inclusive conversion rate (weighted by diversity of users reached)

- Retention rate → Retention parity score (gap between top and bottom quartile user retention)

- Automation rate → Fair automation index (proportion of automated vs human-reviewed tasks by risk category)

This creates an immediate, auditable language of inclusion that founders, analysts, and regulators can all share.
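The three Ethical Modifiers above can be prototyped in a few lines. The sketch below is one interpretation, not a standard: inclusive conversion averages per-segment conversion rates so every segment counts equally, retention parity is top-quartile minus bottom-quartile retention after ranking users by some socio-economic proxy, and the fair automation view reports the human-reviewed share per risk category. Segment labels, the proxy and all names are hypothetical.

```python
from collections import defaultdict


def inclusive_conversion_rate(visits: list[tuple[str, bool]]) -> float:
    """Mean of per-segment conversion rates; each segment counts equally however large it is."""
    totals: dict[str, int] = defaultdict(int)
    converted: dict[str, int] = defaultdict(int)
    for segment, did_convert in visits:
        totals[segment] += 1
        converted[segment] += did_convert
    if not totals:
        return 0.0
    return sum(converted[s] / totals[s] for s in totals) / len(totals)


def retention_parity_gap(users: list[tuple[float, bool]]) -> float:
    """Top-quartile minus bottom-quartile retention, ranking users by a proxy score.

    0.0 means parity; larger values mean better-off users are retained more often.
    """
    if len(users) < 4:
        raise ValueError("need at least four users to form quartiles")
    ranked = sorted(users, key=lambda u: u[0])
    q = len(ranked) // 4

    def retention(group: list[tuple[float, bool]]) -> float:
        return sum(retained for _, retained in group) / len(group)

    return retention(ranked[-q:]) - retention(ranked[:q])


def human_review_share_by_risk(tasks: list[tuple[str, bool]]) -> dict[str, float]:
    """Share of tasks reviewed by a person, per risk category."""
    totals: dict[str, int] = defaultdict(int)
    reviewed: dict[str, int] = defaultdict(int)
    for risk, was_reviewed in tasks:
        totals[risk] += 1
        reviewed[risk] += was_reviewed
    return {risk: reviewed[risk] / totals[risk] for risk in totals}
```

Reporting the raw and inclusive conversion rates side by side makes the trade-off visible. For example, a funnel that converts 8 of 10 metro visits but 1 of 5 rural visits has a raw conversion rate of 0.6 (9 of 15) but an inclusive rate of 0.5.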

Key takeaways

- Traditional KPIs reward speed and cost; ethical KPIs reward trust and access — both are measurable.

- Empathy increases efficiency when modeled as a design variable, not a compliance checklist.

- The Bharat Ethical Innovation Framework can be operationalized inside startup dashboards, investor reports, and public policy scorecards.

- Inclusion metrics are economic indicators; they predict market stability and user lifetime value.

Section 3 — Designing Inclusive AI Systems

From inclusion as intent to inclusion as infrastructure

Every founder says they want inclusion.

Few can code it.

The reason? Inclusion often lives in mission statements, not product architecture.

In Bharat’s AI journey, that won’t work. With more than 120 major languages, a rural internet user base of 400 million, and digital literacy gaps across demographics, inclusion cannot be an afterthought. It must be a design constraint, baked into data, models, and interfaces.

When inclusion becomes infrastructure, ethics stops being philosophy. It becomes engineering.

What makes an AI system inclusive?

An AI system is inclusive when its data, design, and decisions reflect the diversity of its users.

That means it must satisfy three structural principles:

| Principle | Definition | Bharat Application |
|---|---|---|
| Representation | Diverse and context-rich data inputs | Rural dialects, gender diversity, regional consumption patterns |
| Accessibility | Universal usability across literacy, language, and device constraints | Voice-first, low-bandwidth, multilingual interfaces |
| Transparency | Human-understandable decision trails | Explainable models, vernacular grievance dashboards |

Let’s unpack each.

1. Representation: Who trains the model matters

AI models reflect the worldview of their datasets.

In India, where regional heterogeneity defines reality, representation is not optional — it’s foundational.

Consider India’s most common data asymmetries:

| Domain | Urban Overrepresentation | Rural / Informal Underrepresentation | Impact |
|---|---|---|---|
| Finance | GST, credit card, banking data | Cash transactions, informal lending | Exclusion from AI credit scoring |
| Agriculture | Satellite + urban logistics data | Soil & yield microdata from small farms | Mispricing & policy mismatch |
| Retail | Organized e-commerce records | Informal supply chains, haats | Product discovery bias |
| Employment | LinkedIn-style resumes | Skill-based, community-networked jobs | Mismatch between talent and hiring |

If your dataset does not mirror Bharat, your AI will not serve Bharat.

That’s why ethical inclusion begins not with model tuning but with data dignity: collecting and cleaning diverse data, and compensating the people who contribute it fairly.

The next big inclusion challenge in AI is not compute power. It’s context power.
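A first-pass representation audit can compare each segment's share of the training data with its share of the population the system is meant to serve. The sketch below is a minimal version under assumptions: segment labels are already attached to samples, reference shares come from a source such as census or survey data, and a segment counts as underrepresented when its data share falls below 80% of its population share. The threshold and the figures in the example are illustrative only.

```python
from collections import Counter


def representation_gaps(samples: list[str], reference_share: dict[str, float],
                        min_ratio: float = 0.8) -> dict[str, float]:
    """Map each underrepresented segment to (share in data) / (share in population)."""
    counts = Counter(samples)
    total = len(samples)
    gaps = {}
    for segment, expected in reference_share.items():
        if expected <= 0:
            continue  # a segment with no reference share cannot be underrepresented
        observed = counts[segment] / total if total else 0.0
        ratio = observed / expected
        if ratio < min_ratio:
            gaps[segment] = round(ratio, 3)
    return gaps


# Illustrative figures only: a dataset that is 70% urban, audited against a 35/65 urban-rural split.
print(representation_gaps(["urban"] * 70 + ["rural"] * 30, {"urban": 0.35, "rural": 0.65}))
```

The audit can only find gaps in the segments someone thought to label; choosing those segments (language, region, gender, informal versus formal income) is itself a design decision.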

2. Accessibility: Designing for friction, not perfection

Tech design usually optimizes for convenience. Inclusive design optimizes for continuity.

A digitally literate user can click, scan, and transact seamlessly. But a first-generation digital user interacts differently: through pauses, retries, and vernacular speech patterns.

AI systems that interpret those signals as “errors” silently alienate millions.

Table 3.1 – Accessibility layers for AI systems in Bharat

| Layer | Design Principle | Implementation Example |
|---|---|---|
| Language Access | Multilingual + dialect adaptability | AI chatbots like Krutrim or Haptik supporting regional dialects |
| Literacy Access | Voice-first or visual interface | Agri AI using image-based queries for pest detection |
| Device Access | Optimized for low bandwidth, old devices | ONDC-compatible seller dashboards under 1 MB |
| Cognitive Access | Simpler flow, no jargon | Use of icons, storytelling, and AI-guided onboarding |
| Trust Access | Explainable actions | Transparent scoring & AI recommendations in vernacular |

Accessibility is not “UX polish.” It is the difference between adoption and abandonment.

3. Transparency: Explainability as empowerment

Inclusion is meaningless without understanding.

A user who cannot see why an AI made a decision has not been included — they’ve been processed.

Transparency means providing clarity in three layers:

| Transparency Layer | Question it Answers | Implementation |
|---|---|---|
| Data Transparency | “Where did my data go?” | Consent logs, data lineage visualization |
| Decision Transparency | “Why did this happen?” | Explainable AI dashboards |
| Accountability Transparency | “Whom do I contact if it’s wrong?” | Grievance escalation and human override system |

Transparency turns AI from opaque automation into shared reasoning.

It’s the key to trust — and trust is the only form of efficiency that compounds forever.
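The three transparency layers in the table above can be carried together in a single decision record that travels with every automated outcome. The sketch below assumes hypothetical reason codes, field names and translations; it renders the explanation in the user's language and falls back to English when a translation is missing. Labels such as "Outcome:" are left in English for brevity, though a real interface would translate them too.

```python
from dataclasses import dataclass

# Hypothetical reason-code catalogue. Real wording and translations should be
# written and reviewed with native speakers of each supported language.
REASON_TEXT: dict[str, dict[str, str]] = {
    "INCOME_IRREGULAR": {
        "en": "Your recorded income varies a lot from month to month.",
        "hi": "आपकी दर्ज आय हर महीने काफ़ी बदलती है।",
    },
    "DOC_MISSING": {
        "en": "A required document was not found in our records.",
        "hi": "एक ज़रूरी दस्तावेज़ हमारे रिकॉर्ड में नहीं मिला।",
    },
}


@dataclass
class DecisionRecord:
    outcome: str                 # e.g. "approved" or "referred for human review"
    reason_codes: list[str]      # decision transparency: why this happened
    data_sources: list[str]      # data transparency: which data was used
    grievance_contact: str       # accountability transparency: whom to contact
    human_override: bool = True  # whether a person can overturn the outcome


def explain(record: DecisionRecord, lang: str) -> str:
    """Render a record in the user's language, falling back to English per reason."""
    reasons = [REASON_TEXT[code].get(lang, REASON_TEXT[code]["en"]) for code in record.reason_codes]
    lines = [f"Outcome: {record.outcome}"]
    lines += [f"Reason: {text}" for text in reasons]
    lines.append(f"Data used: {', '.join(record.data_sources)}")
    lines.append(f"If this is wrong, contact: {record.grievance_contact}")
    if record.human_override:
        lines.append("A person can review and change this decision.")
    return "\n".join(lines)


record = DecisionRecord(
    outcome="referred for human review",
    reason_codes=["INCOME_IRREGULAR"],
    data_sources=["UPI transaction history (last 6 months)"],
    grievance_contact="branch grievance officer",
)
print(explain(record, "hi"))
```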

Case Study 1: AI in Health Diagnostics (ARMMAN)

ARMMAN, a non-profit supported by Google’s AI for Social Good program, uses predictive AI to identify pregnant women at risk of dropping out of maternal healthcare programs.

- Representation: Dataset includes linguistic, behavioral, and geographic diversity from 18 states.

- Accessibility: Interactive voice calls (IVR) used instead of apps for low-literacy mothers.

- Transparency: Clear opt-in consent and callback option for re-verification.

- Impact: 50% reduction in drop-off rates; improved maternal health outcomes.

Lesson: Inclusion saved lives and optimized efficiency simultaneously — a moral victory with operational dividends.

Case Study 2: AI for Vernacular Commerce (Karya & Google)

Karya, a social enterprise, builds ethically sourced AI datasets in Indian languages by paying rural citizens for data contributions (audio, text, translation).

Its collaboration with Google helps ensure that large language models are trained on data from real Bharat.

- Representation: Over 4 million lines of speech from 22 Indian languages.

- Accessibility: Work-from-mobile model for rural contributors.

- Transparency: Income records, payment tracking, and consent logs publicly auditable.

- Impact: Dual benefit — better Indian LLM performance and direct rural income generation.

Lesson: Data dignity = digital dignity.

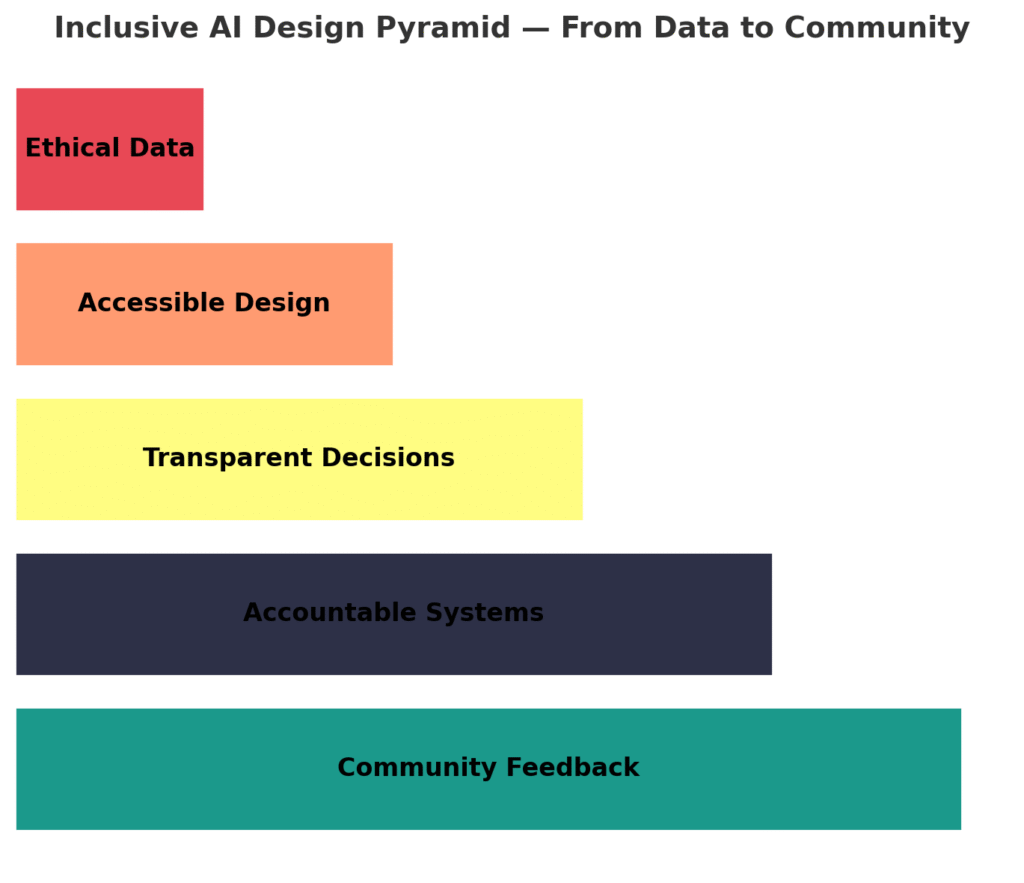

Framework: The Inclusive AI Design Pyramid

Below is the “Inclusive AI Design Pyramid” — a synthesis of what we’ve covered so far.

| Tier | Design Focus | Key Question | Example |
|---|---|---|---|
| 1 | Ethical Data | Who is represented? | Diversify datasets, include under-documented users |
| 2 | Accessible Design | Who can use it? | Voice-first UX, regional languages |
| 3 | Transparent Decisions | Who can understand it? | Explainable outputs in user’s language |
| 4 | Accountable Systems | Who is responsible? | Human-in-loop grievance protocols |
| 5 | Community Feedback | Who improves it? | Participatory AI updates from field users |

When every AI stack in Bharat passes through these five layers, inclusion becomes measurable.
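One way to make the pyramid measurable, sketched under assumptions, is to encode each tier as a named check and report the tiers a system fails, starting from the base. The system-description fields and the pass conditions below are hypothetical placeholders that a team would replace with its own evidence and thresholds.

```python
from typing import Any, Callable

System = dict[str, Any]

# Tiers ordered from the base of the pyramid upward; each check is a hypothetical pass condition.
PYRAMID: list[tuple[str, Callable[[System], bool]]] = [
    ("Ethical Data", lambda s: s["underrepresented_share"] >= s["target_share"]),
    ("Accessible Design", lambda s: s["voice_first"] and len(s["languages"]) >= 2),
    ("Transparent Decisions", lambda s: s["explains_in_user_language"]),
    ("Accountable Systems", lambda s: s["grievance_channel"] is not None),
    ("Community Feedback", lambda s: s["field_feedback_rounds"] >= 1),
]


def failing_tiers(system: System) -> list[str]:
    """Names of the pyramid tiers this system does not yet satisfy, base first."""
    return [name for name, check in PYRAMID if not check(system)]


example: System = {
    "underrepresented_share": 0.30, "target_share": 0.25,
    "voice_first": True, "languages": ["hi", "or", "te"],
    "explains_in_user_language": True,
    "grievance_channel": None,  # no escalation path yet
    "field_feedback_rounds": 2,
}
print(failing_tiers(example))
```

Reporting failures base-first mirrors the pyramid's logic: a system built on unrepresentative data gains little from a polished interface.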

Section 4 — Policy & Governance: Building Ethical Infrastructure

Why ethical innovation needs institutional architecture

Ethical AI cannot depend on good intentions alone. It needs guardrails — policy frameworks, governance models, and institutional memory.

Bharat, unlike many nations still debating regulation, already sits on a mature digital public infrastructure (DPI) base — Aadhaar, UPI, ONDC, CoWIN, DigiLocker, IndiaStack. These systems prove that scale and inclusion can coexist if built on open standards and public accountability.

The challenge ahead is not creating new digital layers — it’s embedding ethics within them.

When AI becomes the operating system of governance and commerce, ethical governance becomes the operating system of democracy.

The four pillars of ethical infrastructure

Ethical innovation in Bharat must rest on four structural pillars:

| Pillar | Definition | Institutional Actor | Example Program |
|---|---|---|---|
| Policy Clarity | National frameworks defining ethical AI principles | NITI Aayog | Responsible AI for All initiative |
| Regulatory Capacity | Mechanisms for algorithmic accountability and audits | MeitY, CERT-In | AI for Social Good guidelines |
| Data Sovereignty | Legal protection of citizen data & consent | Data Protection Board of India | Digital Personal Data Protection Act (2023) |
| Public Participation | Mechanisms for citizen co-creation and feedback | DPI Governance Councils | ONDC & IndiaStack open networks |

These four layers convert ethics from aspiration into administration.

Bharat’s governance advantage

Unlike Western markets dominated by private platforms, India’s digital transformation is state-orchestrated but built on open standards and open APIs.

That is its greatest ethical advantage.

The DPI approach ensures:

- Public ownership of identity and transaction layers.

- Open APIs enabling private innovation.

- Common grievance mechanisms and interoperability standards.

This creates “ethical affordances” — built-in friction points where inclusion and consent are checked before data flows.

When properly governed, DPIs become trust accelerators rather than control systems.

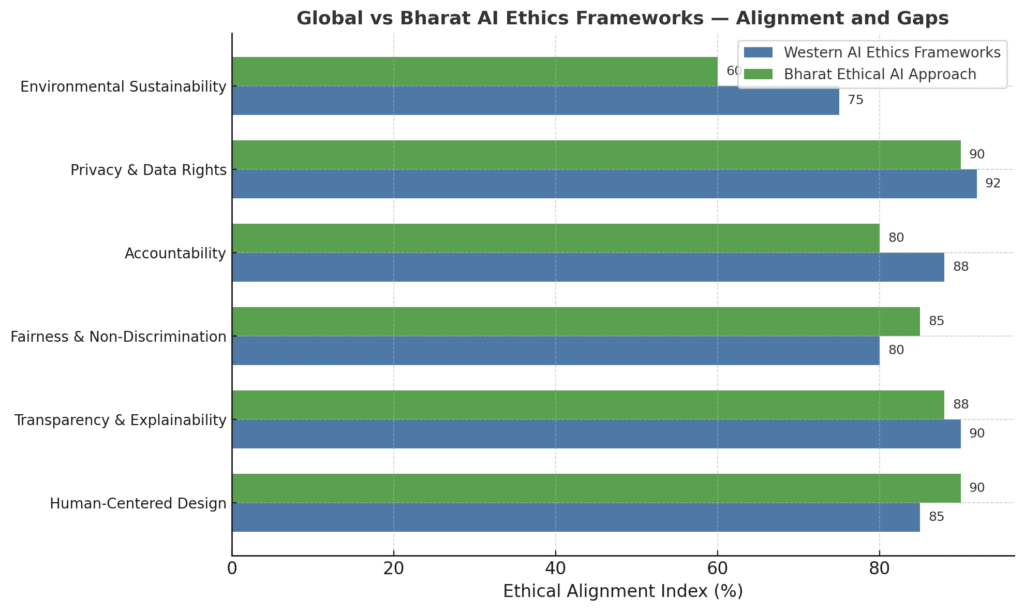

Comparative framework: India vs global ethical AI governance

Table 4.1 – Mapping India’s AI ethics frameworks against global principles

| Ethical Dimension | Bharat (NITI Aayog / MeitY) | OECD AI Principles | UNESCO Recommendation on the Ethics of AI (2021) | Alignment Level |
|---|---|---|---|---|
| Human-Centered Design | AI to augment, not replace, human judgment | “AI should benefit people and planet” | “Human oversight as default” | High |
| Transparency & Explainability | Public disclosure & model interpretability | “Transparency and responsible disclosure” | “Explainability and auditability” | High |
| Fairness & Non-Discrimination | Inclusive datasets, equitable access | “Fairness and non-discrimination” | “Eliminate bias in data” | Moderate–High |
| Accountability | AI oversight committees & audits | “Accountability throughout AI lifecycle” | “Ethical impact assessment mechanisms” | Moderate |
| Privacy & Data Rights | DPDP Act 2023 emphasizes consent & purpose limitation | “Respect for privacy and data protection” | “Data governance with consent” | High |
| Environmental Sustainability | Under development (Green AI roadmap) | “Sustainable development orientation” | “Environmentally sound AI” | Low–Emerging |

India’s alignment with global ethics norms is significant — but partial.

Its current strength lies in inclusivity and accessibility, but its weakness lies in enforcement and environmental impact measurement.

Where the gap remains

Ethical governance frameworks fail when three things happen:

- Principles without procedures.

India’s policy papers articulate ethics but lack binding audit mechanisms.

Most AI deployments (public or private) have no requirement for third-party bias testing.

- Data rights without literacy.

Consent is often given by citizens who don’t fully understand what they’re consenting to.

Ethical consent needs contextual translation, not just checkboxes.

- Public good without public participation.

Policy drafts rarely include input from the people most affected: farmers, gig workers, and micro-entrepreneurs.

Ethics must be co-authored, not imposed.
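Third-party bias testing does not have to be elaborate to be useful. The sketch below is a minimal example, built on our own assumptions. It computes a disparate-impact ratio, which is the approval rate of the least-favoured group divided by that of the most-favoured group. It then flags ratios below 0.8, the "four-fifths" rule of thumb borrowed from US employment practice. The grouping by interface language, the 0.8 threshold, and the function names are illustrative. They are not a standard India has adopted.

```python
from collections import defaultdict

def approval_rates(decisions):
    """decisions: iterable of (group, approved) pairs; returns approval rate per group."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        if ok:
            approved[group] += 1
    return {g: approved[g] / totals[g] for g in totals}

def disparate_impact(decisions, threshold=0.8):
    """Return (ratio, passed): lowest group rate / highest group rate, checked against threshold."""
    rates = approval_rates(decisions)
    highest = max(rates.values())
    if highest == 0:
        return 1.0, True  # nobody was approved, so there is no relative disparity to measure
    ratio = min(rates.values()) / highest
    return ratio, ratio >= threshold

# Hypothetical loan decisions, grouped by the applicant's interface language.
sample = ([("hindi", True)] * 45 + [("hindi", False)] * 55
          + [("english", True)] * 60 + [("english", False)] * 40)
ratio, passed = disparate_impact(sample)
print(f"ratio={ratio:.2f} passed={passed}")  # ratio=0.75 passed=False
```

A single ratio like this is only a screening signal. An independent audit would also look at error rates per group and at how the groups were defined.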

Building the “Ethics-as-Code” governance model

To institutionalize inclusion at scale, Bharat needs what we can call Ethics-as-Code: a programmable layer of governance embedded into every AI API, model, and deployment.

Table 4.2 – The Ethics-as-Code model

| Layer | Function | Implementation Example | Governance Mechanism |
|---|---|---|---|
| Data Layer | Traceable, consent-based data collection | DigiLocker consent framework | Smart contracts for consent revocation |
| Model Layer | Bias audit before deployment | Public AI testing sandboxes | Regulatory audit API |
| Decision Layer | Explainable outputs for affected users | Loan scoring transparency portal | AI decision disclosure standard |
| Feedback Layer | User grievance + correction loop | ONDC Seller grievance model | Citizen-led audit registry |

By converting principles into programmable checks, Bharat can make ethics scalable — not bureaucratic.
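To make "programmable checks" concrete, here is a minimal sketch of a pre-deployment gate. It walks the four layers of Table 4.2 and lists every requirement a deployment fails. The `Deployment` fields, the requirement wording, and the 0.8 bias threshold are hypothetical placeholders. A real gate would pull these facts from consent registries, audit reports, and grievance systems rather than from hand-entered booleans.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple

@dataclass
class Deployment:
    """Hypothetical facts collected about an AI system before go-live."""
    name: str
    records_with_consent: int
    total_records: int
    bias_audit_ratio: Optional[float]  # disparate-impact ratio from an audit; None if never audited
    explains_decisions: bool           # users see a rationale for automated decisions
    grievance_channel: bool            # a working grievance + correction loop exists

# One (layer, requirement, check) entry per row of Table 4.2.
LAYERS: List[Tuple[str, str, Callable[[Deployment], bool]]] = [
    ("data", "traceable consent for every record",
     lambda d: d.total_records > 0 and d.records_with_consent == d.total_records),
    ("model", "bias audit done with ratio >= 0.8",
     lambda d: d.bias_audit_ratio is not None and d.bias_audit_ratio >= 0.8),
    ("decision", "user-facing explanation for decisions",
     lambda d: d.explains_decisions),
    ("feedback", "live grievance and correction loop",
     lambda d: d.grievance_channel),
]

def gate(d: Deployment) -> List[str]:
    """Return unmet requirements; an empty list means the deployment may proceed."""
    return [f"{layer}: {req}" for layer, req, check in LAYERS if not check(d)]

pilot = Deployment("loan-scoring-pilot", records_with_consent=9_800, total_records=10_000,
                   bias_audit_ratio=0.86, explains_decisions=True, grievance_channel=True)
print(gate(pilot))  # ['data: traceable consent for every record']
```

The checks are kept as data rather than as scattered `if` statements. That way the same list could, in principle, be published by a regulator and run by platforms in their release pipelines.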

Case Example: ONDC as a governance prototype

The Open Network for Digital Commerce (ONDC) is more than an e-commerce framework — it’s a live experiment in ethical interoperability.

- Inclusivity: Allows any seller, regardless of platform, to access buyers through open APIs.

- Transparency: Uses open registries, interoperable standards, and public dispute resolution.

- Accountability: Merchant onboarding, grievance redressal, and ratings are decentralized.

ONDC, in many ways, is “Ethics-as-Code” in action — embedding fairness into transaction design.

If Bharat can replicate this model across other AI-heavy domains (health, education, finance), it can operationalize responsible innovation at a national scale.

Section 5 — Global Alignment, Local Wisdom: Toward a Civilizational AI Ethic

Why Bharat’s voice matters in the global AI debate

In the global AI discourse, ethics often means compliance:

- The EU says: regulate before you innovate.

- The U.S. says: innovate before you regulate.

But Bharat has always had a third way: innovate responsibly because innovation is a social act.

This difference is not semantic — it’s civilizational.

Western ethical AI frameworks, from the EU’s AI Act to the OECD’s AI Principles, are grounded in rights, autonomy, and individual protection. They safeguard the individual from the system.

Bharat’s ethical tradition, meanwhile, is rooted in collective harmony — balancing the system with the individual.

It protects the community through the individual.

That distinction — from “rights-based” to “relationship-based” ethics — may be the key to rehumanizing AI in a world that is increasingly algorithmic.

From principles to relationships: two moral grammars

Let’s map the contrast.

Table 5.1 – Western vs. Bharat Moral Frameworks for AI

| Dimension | Western AI Ethics (EU/US) | Bharat’s Civilizational Ethic |
|---|---|---|
| Moral Core | Individual rights, autonomy, liberty | Collective harmony, duty, interdependence (Dharma) |
| Primary Objective | Minimize harm | Maximize balance |
| Ethical Mechanism | Regulation, compliance, deterrence | Self-regulation, responsibility, consciousness |
| Unit of Concern | Individual user | Society and ecosystem |
| Value of Technology | Tool for progress | Instrument of service (Seva) |
| Outcome Measure | Safety & privacy compliance | Social inclusion & moral well-being |
| Failure Mode | Rights violation | Loss of societal cohesion |

Bharat’s tradition has long treated knowledge (Vidya) and tools (Yantra) as morally loaded — neutral only in form, never in consequence.

In this worldview, AI cannot be separated from the moral state of those who create and deploy it.

Thus, Dharma becomes a design principle.

The case for Dharma-driven AI

Dharma is often mistranslated as “religion” or “duty.” It is better understood as right alignment — acting in harmony with truth and consequence.

In the context of AI, Dharma can be reinterpreted as ethical proportionality:

Technology must scale power in proportion to responsibility.

Table 5.2 – Applying Dharma Principles to AI Design

| Dharma Principle | Translation in AI Context | Implementation Example |
|---|---|---|
| Ahimsa (Non-harm) | Avoid algorithmic harm or exclusion | Bias testing, explainable models |
| Satya (Truth) | Ensure data authenticity and transparency | Source verification & model interpretability |
| Seva (Service) | Design AI for social good | Health, education, rural commerce use cases |
| Swadharma (Self-awareness) | Responsibility of creators & coders | Internal ethics review within startups |
| Shraddha (Faith through clarity) | Build user trust via explainability | Vernacular grievance and feedback systems |

This ethical vocabulary doesn’t replace global standards — it completes them.

It turns checklists into conscience.

Global convergence: The alignment opportunity

Let’s compare Bharat’s current policy direction with leading global initiatives.

Table 5.3 – Alignment Map of Ethical AI Frameworks (as of 2024)

| Framework | Region | Core Focus | Ethical Common Ground with Bharat |
|---|---|---|---|
| EU AI Act (2024) | Europe | Risk classification, consumer protection | Transparency, accountability |
| OECD AI Principles (2019, revised 2024) | Global | Fairness, safety, inclusive growth | Responsible innovation, inclusion |
| UNESCO AI Ethics Framework (2022) | Global | Human rights, sustainability | Human-centered design, justice |
| U.S. Blueprint for an AI Bill of Rights (2022) | United States | Algorithmic accountability, privacy | Protection of dignity and fairness |
| NITI Aayog Responsible AI for All (2021) | India | Ethical AI for inclusion & trust | Universal human values, inclusive access |

Observation:

The language differs, but the intent converges.

The world wants “responsible AI.” Bharat has lived the philosophy of responsibility for centuries.

The next step is translating that wisdom into governance frameworks that scale.

Why local wisdom matters globally

As AI systems begin to shape identity, economy, and belief at planetary scale, the global community faces a paradox:

- The algorithms are global.

- The consequences are local.

India’s civilizational philosophy offers a template for managing that paradox.

It understands locality as sacred, not peripheral.

In practical terms, that means:

- Local languages as data, not noise.

- Local consent as legitimacy, not bureaucracy.

- Local knowledge as training input, not anecdote.

When Bharat encodes this into its AI pipelines, it will export a model of “contextual globalization” — AI that is globally scalable but locally ethical.

Case Example: AI & Dharma in Governance — CoWIN and Seva in Design

During the COVID-19 pandemic, India’s CoWIN vaccination platform became one of the world’s largest digital public goods.

It was not just a technology solution — it was Seva codified.

- Scale: Over 2 billion vaccination certificates issued.

- Inclusivity: Built with SMS and IVR support for citizens without smartphones.

- Transparency: Real-time slot visibility to reduce corruption.

- Moral Code: “No citizen left behind” as design axiom.

CoWIN exemplifies how Bharat’s moral logic — service, equality, transparency — can power AI governance at scale without centralizing control.

Proposed Framework: The Bharat Model of Ethical Globalization

Table 5.4 – The Bharat Model vs Global Compliance Model

| Principle | Global Compliance Model | Bharat Ethical Globalization Model |
|---|---|---|
| Ethics Function | Reactive (post-deployment checks) | Proactive (ethical design sprints) |
| Governance Mechanism | Regulation through penalties | Governance through shared moral standards |
| Knowledge Source | Technical committees, legal experts | Technical + cultural practitioners |
| Value Creation | Shareholder & state | Community, ecosystem, and planet |
| Long-Term Outcome | Safe AI | Conscious AI |

Bharat’s model does not compete with global AI ethics — it complements and humanizes it.

Section 6 — Toward a Human-Centered AI Economy

(How ethical innovation becomes measurable growth — introducing the Bharat Ethical AI Index and practical economic pathways)

Lead:

If Part 4 has argued that ethics is a design constraint, Section 6 makes the business case. Ethical AI is not philanthropy; it is economic strategy. When inclusion, transparency, and data dignity are converted into metrics, they become investable, auditable, and scalable. This section proposes a measurement architecture (the Bharat Ethical AI Index — BEAI), shows how ethical design reduces systemic risk and cost, and maps economic scenarios that link ethical innovation to measurable GDP, employment, and resilience outcomes.

1) Why convert ethics into an index?

Investors, procurement teams, and policymakers make decisions using quantified signals. Without measurable indicators, ethics stays optional. The BEAI converts ethical design into three things decision-makers value: predictable risk reduction, reliable adoption metrics, and improved long-term unit economics.

Economic hypothesis: platforms and products with higher BEAI scores will demonstrate (a) lower churn among vernacular users, (b) lower complaint and dispute costs, and (c) higher regulated-market access — all of which translate into higher lifetime value (LTV) and lower customer acquisition cost (CAC) over time.
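The churn channel in this hypothesis can be made explicit with a common simplification: LTV ≈ (annual revenue per user × gross margin) ÷ annual churn rate. The sketch below uses hypothetical inputs: ₹2,000 annual revenue per user, a 40% margin, 30% baseline churn, and a ₹800 CAC. It applies the 15% churn reduction assumed later in this section, which we read as a relative reduction. Because churn sits in the denominator, LTV rises by a factor of 1/0.85, about 17.6%. The LTV:CAC ratio therefore improves even before any CAC saving.

```python
def lifetime_value(annual_revenue_per_user: float, gross_margin: float, annual_churn: float) -> float:
    """Steady-state LTV approximation: yearly margin per user divided by the yearly churn rate."""
    if not 0 < annual_churn <= 1:
        raise ValueError("annual_churn must be in (0, 1]")
    return annual_revenue_per_user * gross_margin / annual_churn

# Hypothetical inputs for a vernacular-commerce user (illustrative only).
ARPU = 2_000                          # ₹ per user per year
MARGIN = 0.40
BASE_CHURN = 0.30
BEAI_CHURN = BASE_CHURN * (1 - 0.15)  # 15% relative churn reduction (our reading)
CAC = 800                             # ₹ to acquire one user, held constant here

base_ltv = lifetime_value(ARPU, MARGIN, BASE_CHURN)
beai_ltv = lifetime_value(ARPU, MARGIN, BEAI_CHURN)
print(f"LTV baseline ₹{base_ltv:,.0f}, BEAI ₹{beai_ltv:,.0f}")
print(f"LTV:CAC baseline {base_ltv / CAC:.2f}, BEAI {beai_ltv / CAC:.2f}")
```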

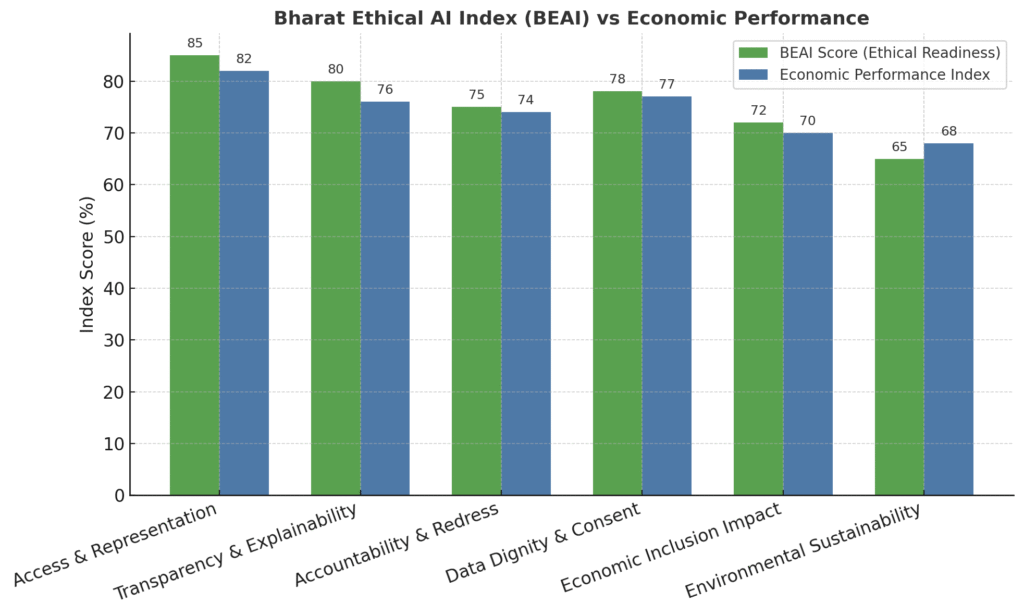

2) The Bharat Ethical AI Index (BEAI) — overview

Purpose: A composite score (0–100) that rates AI products, platforms, and programs on their ethical readiness and inclusion impact. Use cases: investor due diligence, public procurement scoring, CSR impact reporting, and regulatory compliance dashboards.

Composition (example weighting):

| Pillar | Weight (%) | Rationale |
|---|---|---|
| Access & Representation | 30 | Proportion of users from underrepresented regions/languages; diversity of training data. |
| Transparency & Explainability | 20 | Share of automated decisions with user-facing rationales; availability of audit logs. |
| Accountability & Redress | 15 | Presence and responsiveness of grievance mechanisms; human-in-loop ratio for high-risk flows. |
| Data Dignity & Consent | 15 | Consent traceability, data minimization, compensation for data contributors. |
| Economic Inclusion Impact | 10 | Measured SROI (social return) per ₹ invested — change in incomes, market access. |
| Environmental & Operational Sustainability | 10 | Model energy footprint + sustainable deployment practices. |

Score interpretation:

- 80–100: Ethical Leader — eligible for public procurement premium and impact funding.

- 60–79: Ethical Compliant — recommended for scaled pilots with conditional oversight.

- 40–59: Needs Improvement — deploy with constraints and monitoring.

- <40: High Risk — avoid for public procurement; require remediation.
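The composite score and its bands can be computed directly from the example weights. The sketch below assumes each pillar has already been scored on 0–100. The pillar keys are our own naming. The bands treat each threshold as a lower bound, so a score of 79.5 falls in "Ethical Compliant"; the integer ranges above leave that case open.

```python
from typing import Dict

# Example weights from the composition table (percent; they sum to 100).
WEIGHTS = {
    "access_representation": 30,
    "transparency_explainability": 20,
    "accountability_redress": 15,
    "data_dignity_consent": 15,
    "economic_inclusion": 10,
    "environmental_sustainability": 10,
}

def beai_score(pillar_scores: Dict[str, float]) -> float:
    """Weighted average of pillar scores, each already normalized to 0-100."""
    if set(pillar_scores) != set(WEIGHTS):
        raise ValueError("need exactly one score per pillar")
    for name, value in pillar_scores.items():
        if not 0 <= value <= 100:
            raise ValueError(f"{name} score must be within 0-100")
    return sum(WEIGHTS[p] * s for p, s in pillar_scores.items()) / 100

def beai_band(score: float) -> str:
    """Map a composite score to the interpretation bands (thresholds are lower bounds)."""
    if score >= 80:
        return "Ethical Leader"
    if score >= 60:
        return "Ethical Compliant"
    if score >= 40:
        return "Needs Improvement"
    return "High Risk"

# Hypothetical pillar scores for one product.
product = {
    "access_representation": 72,
    "transparency_explainability": 65,
    "accountability_redress": 50,
    "data_dignity_consent": 80,
    "economic_inclusion": 55,
    "environmental_sustainability": 60,
}
score = beai_score(product)
print(score, beai_band(score))  # 65.6 Ethical Compliant
```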

3) Measurement methods (practical, auditable)

Each pillar should be operationalized through 3–6 indicators that are auditable and non-binary. Example indicators and data sources:

A. Access & Representation (30%)

- % users from Tier-2/3 and rural regions (platform analytics + geotagged opt-in).

- % UI interactions in vernacular languages (language detection logs).

- Diversity ratio in training datasets (data provenance docs).

B. Transparency & Explainability (20%)

- % of decisions accompanied by human-readable rationale (interface logs).

- Frequency of model-version disclosures (deploy logs).

- Availability of public model card and audit summary.

C. Accountability & Redress (15%)

- Average time to resolve grievances (support ticket metrics).

- Human-in-loop proportion for “high-risk” decisions (risk model classification).

- Presence (and score) of an independent third-party audit.

D. Data Dignity & Consent (15%)

- Consent traceability score (smart-contract or log verification).

- Compensation paid to data contributors per dataset (payment logs).

- Data minimization compliance (PII inventory).
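The consent indicator above leaves "log verification" open. One lightweight option is an append-only consent log in which each entry stores the SHA-256 hash of the previous entry. Any later edit to a past consent record then breaks the chain and shows up in an audit. The sketch below is built on our own assumptions. The field names (`user_id`, `purpose`, `granted`) are hypothetical. Scoring a broken chain as zero is also our choice. This is a tamper-evidence sketch, not a substitute for DPDP Act compliance or for a smart-contract registry.

```python
import hashlib
import json
from typing import Dict, List, Tuple

GENESIS = "0" * 64  # stand-in "previous hash" for the first entry

def _digest(entry: dict) -> str:
    """SHA-256 over a canonical JSON encoding (sorted keys, no spaces)."""
    payload = json.dumps(entry, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()

def append_consent(log: List[dict], user_id: str, purpose: str, granted: bool) -> None:
    """Record a grant (granted=True) or revocation (False), chained to the previous entry."""
    entry = {
        "user_id": user_id,
        "purpose": purpose,
        "granted": granted,
        "prev_hash": log[-1]["hash"] if log else GENESIS,
    }
    entry["hash"] = _digest(entry)  # hash of the body, computed before the hash field is added
    log.append(entry)

def verify_chain(log: List[dict]) -> bool:
    """Recompute each hash; an edited entry or a gap in the chain returns False."""
    prev_hash = GENESIS
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "hash"}
        if body["prev_hash"] != prev_hash or _digest(body) != entry["hash"]:
            return False
        prev_hash = entry["hash"]
    return True

def traceability_score(log: List[dict], records: List[Tuple[str, str]]) -> float:
    """Percent of (user_id, purpose) data records backed by consent that is still granted."""
    if not verify_chain(log):
        return 0.0  # assumption: a broken chain means no consent in it can be trusted
    latest: Dict[Tuple[str, str], bool] = {}
    for entry in log:
        latest[(entry["user_id"], entry["purpose"])] = entry["granted"]  # later entries win
    if not records:
        return 100.0
    backed = sum(1 for r in records if latest.get(r, False))
    return 100 * backed / len(records)

log: List[dict] = []
append_consent(log, "u1", "credit-scoring", True)
append_consent(log, "u2", "credit-scoring", True)
append_consent(log, "u2", "credit-scoring", False)  # u2 later revokes
records = [("u1", "credit-scoring"), ("u2", "credit-scoring")]
print(traceability_score(log, records))  # 50.0
log[0]["granted"] = False  # simulate tampering with an old record
print(verify_chain(log))  # False
```

A hash chain alone cannot detect the newest entries being silently dropped. Publishing or anchoring the latest hash somewhere independent closes that gap.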

E. Economic Inclusion Impact (10%)

- % increase in revenue/price realization for small sellers post-deployment (panel data).

- Jobs supported (full-time equivalent) attributable to platform activity.

- Measured SROI metric via standardized survey.

F. Environmental & Operational Sustainability (10%)

- Model energy consumption per 1k requests (in kWh).

- Use of on-device inference vs heavy cloud calls.

- Lifecycle policy for model retraining.

Audit cadence: quarterly internal reporting; annual third-party verification for public procurement.

4) Illustrative (scenario) economic projections — conservative & aggressive

Assumptions (example, for a sector like vernacular e-commerce enabling 1 million new rural sellers):

- Average annual incremental revenue per seller: ₹30,000.

- Platform take rate: 7% (commission + ecosystem fees).

- BEAI-compliant platforms reduce churn by 15% and increase conversion by 10% vs non-BEAI peers.

- Lower dispute costs (savings) and higher repeat purchase rates.

Conservative scenario (5-year):

- New rural GDP contribution via platform: ₹30,000 × 1,000,000 = ₹3,000 crore/year.

- Platform revenue at 7% = ₹210 crore/year.

- With BEAI uplift (10% extra conversion + 15% lower churn) net incremental revenue over 5 years is material and reduces CAC by ~20–25%.
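The conservative scenario's arithmetic is easy to get wrong because of the crore conversion (1 crore = 10,000,000 rupees). The sketch below recomputes the headline figures in integer rupees. As a purely hypothetical extension, it then compares five-year cumulative platform revenue from a single seller cohort with and without the BEAI uplift. Three inputs are our assumptions, not figures from this report: a 20% baseline annual churn, reading "15% lower churn" as a relative cut to 17%, and applying the 10% conversion uplift to cohort size.

```python
CRORE = 10**7  # 1 crore = 10,000,000 rupees

def to_crore(rupees: float) -> float:
    return rupees / CRORE

# Assumptions stated in the scenario above.
SELLERS = 1_000_000
REVENUE_PER_SELLER = 30_000   # ₹ per seller per year
TAKE_RATE_PCT = 7

seller_revenue = SELLERS * REVENUE_PER_SELLER            # integer rupees, no float rounding
platform_revenue = seller_revenue * TAKE_RATE_PCT // 100

print(to_crore(seller_revenue), "crore/year seller revenue")      # 3000.0
print(to_crore(platform_revenue), "crore/year platform revenue")  # 210.0

def cumulative_platform_revenue(cohort: float, annual_churn: float, years: int = 5) -> float:
    """Sum platform revenue from one seller cohort that shrinks by annual_churn each year."""
    total = 0.0
    active = cohort
    for _ in range(years):
        total += active * REVENUE_PER_SELLER * TAKE_RATE_PCT / 100
        active *= 1 - annual_churn
    return total

# Hypothetical: 20% baseline churn; BEAI cuts it by 15% (to 17%) and lifts conversion by 10%.
baseline = cumulative_platform_revenue(SELLERS, 0.20)
beai = cumulative_platform_revenue(SELLERS * 1.10, 0.20 * 0.85)
print(f"5-year platform revenue: baseline ₹{to_crore(baseline):,.0f} cr, BEAI ₹{to_crore(beai):,.0f} cr")
```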

Aggressive scenario (5-year, BEAI leaders, systemwide adoption):

- When scaled across multiple sectors (agri marketplaces, micro-services, vernacular ad networks), ethical innovation could unlock tens of thousands of crores in formalized rural economic flows and generate millions of stable digital livelihoods.

Note: numbers above are illustrative to show mechanism; any public report should replace these with verified sector datasets and sensitivity analyses. The value is in the structural channel: BEAI → trust ↑ → participation ↑ → transaction volume ↑ → formal GDP capture ↑.

5) How BEAI changes investment and procurement behaviour

Bharat Ethical AI Index (BEAI) — Operational Template

This framework helps evaluate AI systems on inclusion, transparency, and ethical readiness. Each pillar contributes to the composite BEAI score (0–100).

| Pillar | Weight (%) | Example Indicators | Measurement Method | Data Source Examples | Score Calculation Notes |
|---|---|---|---|---|---|
| Access & Representation | 30 | % users from Tier-2/3 & rural regions; % UI in vernacular languages; diversity in datasets. | Regional analytics, language logs, dataset provenance. | Platform analytics, census overlays, third-party audits. | Weighted average of sub-indicators; bonus for >5 language regions. |
| Transparency & Explainability | 20 | % automated decisions with rationale; model-version disclosures; public audit summaries. | Interface logs, deployment records, audit reports. | Internal logs, transparency dashboards. | Transparency index = (rationale + disclosure + audit) / 3 |
| Accountability & Redress | 15 | Avg. grievance resolution time; human-in-loop ratio; independent audit presence. | Support ticket data, SLA dashboards. | CRM data, grievance APIs, third-party oversight. | Accountability index = (SLA + human-loop + audit) / 3 |
| Data Dignity & Consent | 15 | Consent traceability score; compensation for data contributors; data minimization. | Smart-contract logs, payment records, privacy inventories. | Consent registry, compliance trackers. | Data dignity index = (traceability + compensation + minimization) / 3 |
| Economic Inclusion Impact | 10 | Income improvement; jobs supported; SROI (social return on investment). | Panel surveys, impact analytics. | Survey & verified impact data. | SROI = (net income gain / investment cost) × 100 |
| Environmental & Operational Sustainability | 10 | Model energy use; % on-device inference; retraining policy. | Energy audits, hardware usage reports. | Cloud telemetry, ESG statements. | Sustainability = 100 – (energy intensity ÷ benchmark × 100) |
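The "Score Calculation Notes" column translates into a few small functions. The sketch below implements the equal-weight sub-indices, the SROI ratio, and the sustainability formula as written, with one addition of our own. The sustainability score is clamped to 0–100, because the formula as written goes negative once energy intensity exceeds the benchmark. Function names are hypothetical, and sub-indicators are assumed to be pre-scaled to 0–100.

```python
def mean_index(*sub_indicators: float) -> float:
    """Equal-weight average, e.g. (rationale + disclosure + audit) / 3, each on 0-100."""
    if not sub_indicators:
        raise ValueError("need at least one sub-indicator")
    return sum(sub_indicators) / len(sub_indicators)

def sroi_percent(net_income_gain: float, investment_cost: float) -> float:
    """SROI = (net income gain / investment cost) x 100."""
    if investment_cost <= 0:
        raise ValueError("investment_cost must be positive")
    return net_income_gain / investment_cost * 100

def sustainability_score(energy_kwh_per_1k: float, benchmark_kwh_per_1k: float) -> float:
    """100 - (energy intensity / benchmark x 100), clamped to 0-100 (the clamp is our assumption)."""
    if benchmark_kwh_per_1k <= 0:
        raise ValueError("benchmark must be positive")
    raw = 100 - energy_kwh_per_1k / benchmark_kwh_per_1k * 100
    return max(0.0, min(100.0, raw))

# Hypothetical inputs for one product.
print(mean_index(70, 90, 50))                 # 70.0 (transparency index)
print(sroi_percent(4_500_000, 1_500_000))     # 300.0
print(sustainability_score(0.5, 2.0))         # 75.0
print(sustainability_score(3.0, 2.0))         # 0.0 (above benchmark, clamped)
```

SROI as defined here is not bounded to 100. It would need its own normalization, for example against a sector benchmark, before it can feed the 0–100 Economic Inclusion pillar.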

For VC & Impact Funds:

- Add BEAI thresholds to term sheets for follow-on investment.

- Offer lower-rate growth capital to products that improve BEAI score within defined timelines.

For Corporates & Platforms:

- Prioritize integrations with BEAI-certified vendors.

- Include BEAI score as a metric in supplier dashboards (similar to sustainability scoring).

For Government Procurement:

- Make minimum BEAI scores a condition for public tenders in health, education, and livelihood services.

- Offer “ethical innovation grants” to help non-compliant incubatees reach compliance.

For Founders:

- Use BEAI as a product roadmap tool: invest in the pillars that unlock market access, not just vanity KPIs.

6) Practical playbook — first 12 months for a founder

Month 0–3: Baseline & Data Dignity

- Run a data-provenance audit; record consent metadata; implement rudimentary compensation for crowd-sourced data.

Month 3–6: Accessibility & Transparency

- Deploy vernacular interfaces for the top three target languages.

- Publish a model card and create human-readable rationales for the top three decision flows.

Month 6–9: Accountability & Redress

- Build a grievance-triage process with SLA targets; add human oversight for flagged outcomes.

- Run pilot third-party bias audits.
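A grievance triage with SLA targets and human oversight can start very small. The sketch below assigns each complaint a priority from simple keyword rules and attaches an SLA deadline. It flags every high-priority ticket for a human reviewer and measures on-time resolution. The SLA hours, the list of high-risk flows, and the keywords are hypothetical placeholders that a founder would replace with their own risk classification.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Tuple

SLA_HOURS = {"high": 24, "medium": 72, "low": 168}   # hypothetical targets per priority
HIGH_RISK_FLOWS = {"credit", "health", "benefits"}   # hypothetical high-risk product flows

@dataclass
class Grievance:
    ticket_id: str
    flow: str          # product flow the complaint concerns, e.g. "credit"
    text: str
    received: datetime

@dataclass
class Triage:
    ticket_id: str
    priority: str
    due_by: datetime
    needs_human: bool

def triage(g: Grievance) -> Triage:
    """Assign priority and SLA deadline; every high-priority ticket goes to a human."""
    lowered = g.text.lower()
    if g.flow in HIGH_RISK_FLOWS or "rejected" in lowered or "denied" in lowered:
        priority = "high"
    elif "wrong" in lowered or "error" in lowered:
        priority = "medium"
    else:
        priority = "low"
    return Triage(g.ticket_id, priority,
                  g.received + timedelta(hours=SLA_HOURS[priority]),
                  needs_human=(priority == "high"))

def sla_compliance(resolved: List[Tuple[Triage, datetime]]) -> float:
    """Percent of resolved tickets closed on or before their deadline."""
    if not resolved:
        return 100.0
    on_time = sum(1 for t, closed in resolved if closed <= t.due_by)
    return 100 * on_time / len(resolved)

t = triage(Grievance("G-1", "credit", "Loan rejected without reason", datetime(2024, 6, 1, 9, 0)))
print(t.priority, t.due_by, t.needs_human)  # high 2024-06-02 09:00:00 True
```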

Month 9–12: Economic Inclusion Measurement

- Conduct panel surveys to capture income and market access changes among early users; calculate preliminary SROI.

Outcomes (by month 12): initial BEAI score, investor-ready ethical dossier, prioritized roadmap to reach “Ethical Compliant” threshold.

7) Policy levers that accelerate BEAI adoption

- Procurement incentives: preferential scoring in government tenders for BEAI leaders.

- Tax & subsidy design: R&D credits for inclusion-focused product development.

- Data trust funds: subsidize dataset creation and compensation for low-income data contributors.

- Regulatory sandboxes: require BEAI baseline for sandbox admission; accelerate safe real-world tests.

8) Key takeaways — economics of ethical innovation

- Ethics converted to measurable metrics becomes investment grade.

- BEAI is both a risk-mitigation tool and a market-creation tool: it reduces dispute costs and raises participation.

- Founders who embed BEAI early gain preferential access to government partnerships, impact capital, and durable user trust.

- For Bharat, ethical innovation is a competitive lever — not a constraint — that can convert informal economic activity into resilient, traceable, and dignified digital livelihoods.

Conclusion — The Dawn of Ethical Intelligence

When history looks back at Bharat’s digital transformation, it won’t measure success in terabytes or transaction counts.

It will measure how many people — from the smallest village to the largest city — felt seen, respected, and empowered by technology.

AI is not destiny. It’s direction.

It doesn’t reshape rural India by itself; it does so through the hands, hearts, and decisions of those who build and govern it.

If the industrial revolution mechanized power, and the information revolution democratized knowledge, then this AI revolution must humanize intelligence.

It must bring consciousness back to code.

A new contract between technology and society

The next decade demands a moral rearchitecture — not just smarter machines, but wiser systems.

Systems that:

- Measure growth not by speed, but by spread.

- Reward innovation not by disruption, but by inclusion.

- Judge intelligence not by prediction accuracy, but by human impact.

This is the essence of what the Bharat Intelligence Series stands for: to measure what truly matters, and to imagine what’s possible when ethics becomes infrastructure.

The rise of the ethical founder

In Bharat’s emerging startup corridors — from Bhubaneswar to Bhopal, Guwahati to Guntur — a new kind of founder is rising.

Not just a technologist or business builder, but a custodian of consequence.

They see that the future of rural entrepreneurship is not built in labs or cities; it’s built in trust.

Trust that AI will not erase identity, but extend it.

Trust that inclusion is not a checkbox, but a competitive advantage.

Trust that the next billion users deserve technology that listens as much as it learns.

These founders will define Bharat’s AI decade — because they will make ethics operational and inclusion inevitable.

From automation to awakening

For policymakers, the call is clear:

Build AI that listens to the hum of the haat, not just the hum of the server.

Audit algorithms not for profit, but for fairness.

Treat every digital citizen as a stakeholder, not a statistic.

For entrepreneurs, the challenge is creative:

Make products that grow with empathy, scale with context, and serve with conscience.

Because the true breakthrough won’t be artificial intelligence — it will be authentic intelligence.

A vision beyond metrics

When AI learns the languages of Bharat, understands its dialects, respects its rhythms, and empowers its entrepreneurs, then, and only then, will we have built not just a digital economy, but a digital civilization.

That is the promise before us:

A future where algorithms serve aspiration, and code carries compassion.

A future where technology doesn’t just optimize — it uplifts.

That is the future Bharat deserves.

And that is the future this report — and this movement — stands to build.